# Pure Web Video Editing

# Introduction

The WebCodecs API exposes audio and video codecs to the Web platform, making it possible to build efficient, professional video editing products that run in the browser or in Electron.

Readers can refer to the author's introductory series for more detailed information, or directly use the WebAV open-source project to create/edit audio and video files in the browser.

# Background & Solutions

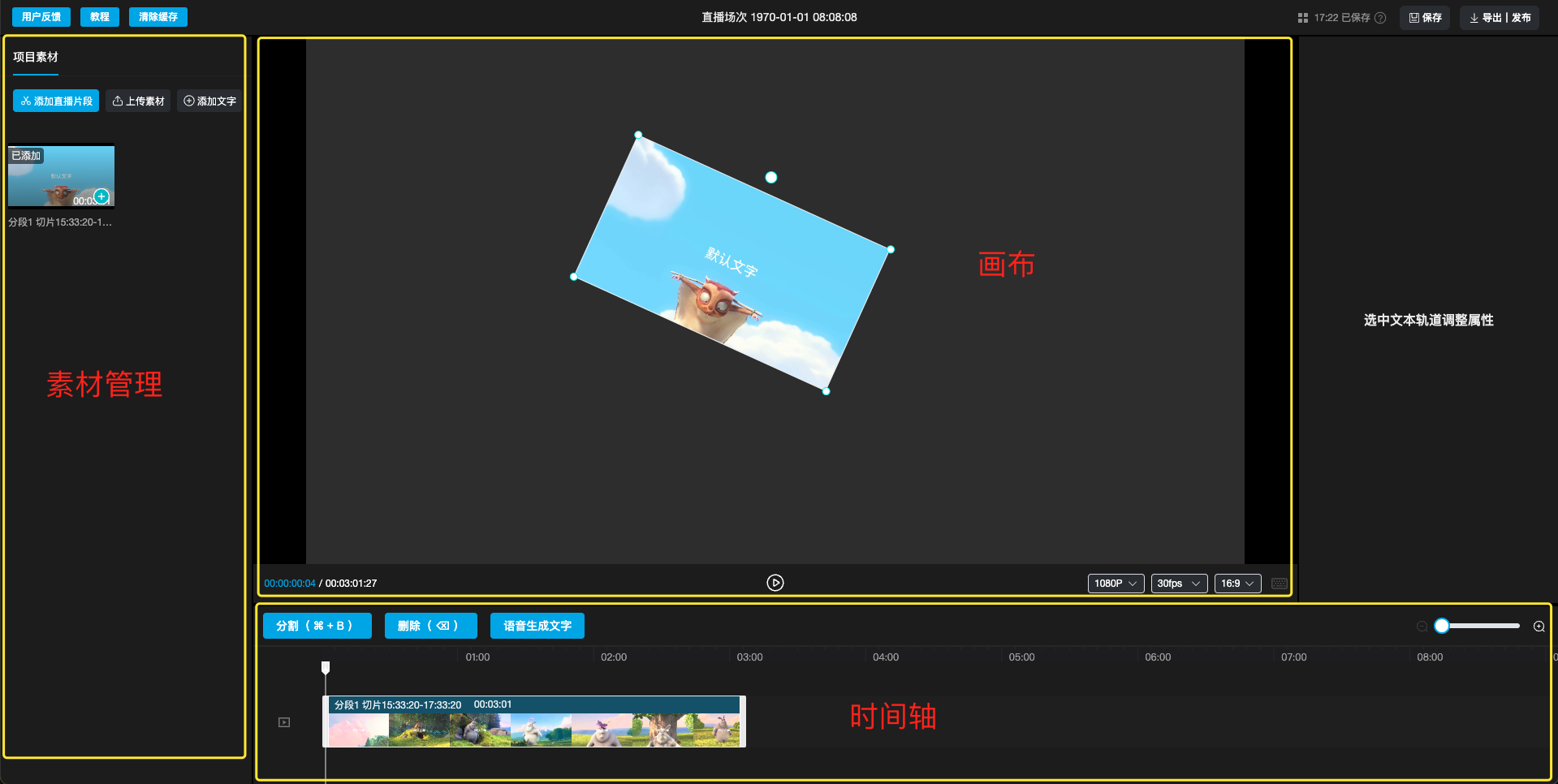

Streamers who submit content cut from their live streams need simple video editing, so we set out to build a lightweight video editing product. It lets users complete the whole workflow online: live streaming, editing, then submission.

Current video editing solutions on the Web platform include:

- Cloud-based: a Web UI for editing, with user operations synchronized to the cloud for processing

- ffmpeg.wasm: Compiling ffmpeg to WebAssembly for in-browser editing

- WebCodecs: Using WebCodecs API for video data encoding/decoding, combined with Web APIs and third-party libraries

| | Cloud | ffmpeg.wasm | WebCodecs |
|---|---|---|---|
| Cost | Poor | Good | Good |
| Ecosystem | Good | Medium | Poor |
| Extensibility | Medium | Poor | Good |
| Compatibility | Good | Good | Poor |
| Performance | Medium | Poor | Good |

# Solution Analysis

The WebCodecs solution offers clear advantages in cost and extensibility, though its ecosystem maturity and compatibility are somewhat lacking.

Cloud-based solutions are currently mainstream; with sufficient project budget, they can complement WebCodecs well.

ffmpeg.wasm is impractical due to poor performance.

# WebCodecs Solution

Advantages

- Cost: Requires minimal Web development to implement frontend editing features, reducing both development costs and technical complexity, with no server running or maintenance costs

- Extensibility: Easy integration with Canvas and WebAudio for custom functionality

Disadvantages

- Ecosystem Maturity: Lacks readily available transitions, filters, effects, and supports limited container formats

- Compatibility: Requires Chrome/Edge 94+ (approximately 10% of users incompatible)

Given the product positioning (lightweight editing tool) and user characteristics (streamers), these drawbacks are acceptable because:

- Ecosystem immaturity is a development cost issue rather than a capability limitation; existing features meet current product needs

- Compatibility is a temporary issue that eases over time; a clear prompt can guide users to upgrade their browsers
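The upgrade prompt mentioned above can be driven by plain feature detection. The following is a minimal sketch, not the product's actual check: the list of required constructors, the helper names `missingWebCodecsApis` and `upgradePrompt`, and the message text are all assumptions made for illustration.

```typescript
// Names of the WebCodecs constructors this sketch treats as required (an assumption).
const REQUIRED_APIS = ["VideoEncoder", "VideoDecoder", "AudioEncoder", "AudioDecoder"] as const;

/**
 * Returns the required WebCodecs constructors that are missing from `scope`.
 * `scope` defaults to the global object; it is a parameter only so the check can be tested.
 */
function missingWebCodecsApis(
  scope: Record<string, unknown> = globalThis as unknown as Record<string, unknown>,
): string[] {
  return REQUIRED_APIS.filter((name) => typeof scope[name] !== "function");
}

/** Hypothetical helper: builds the message shown on unsupported browsers, or null if none is needed. */
function upgradePrompt(missing: string[]): string | null {
  if (missing.length === 0) return null;
  return `Your browser lacks ${missing.join(", ")}. Please upgrade to Chrome or Edge 94 or later.`;
}
```

Calling `upgradePrompt(missingWebCodecsApis())` at startup yields either `null` or a message to show before the editor loads.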

For WebCodecs performance and optimization insights, see the article WebCodecs Performance and Optimization Insights listed in the Appendix.

Based on the above, we chose the WebCodecs solution.

# Feature Analysis

Developing a video editing product requires three steps:

- Implement asset management module

- Implement canvas module

- Implement timeline module

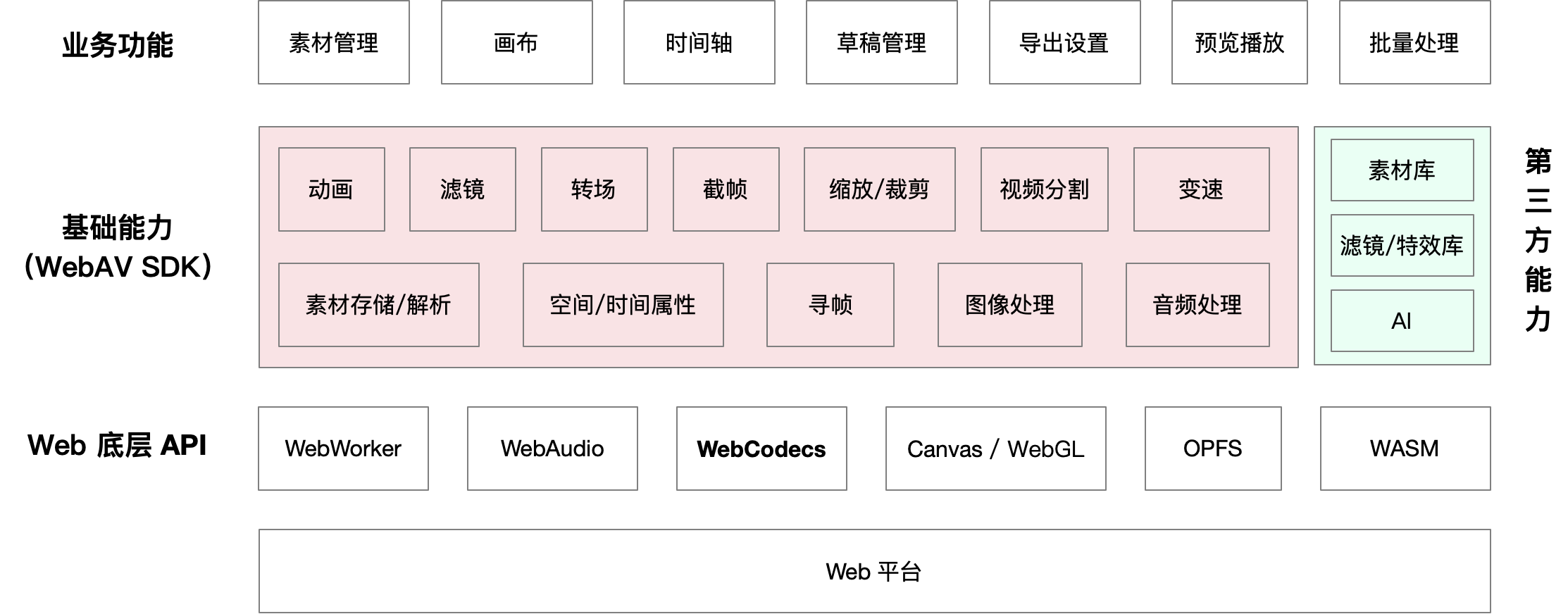

Each module contains numerous sub-features like asset management, thumbnails, preview playback, which can be broken down into fundamental capabilities and implemented using Web platform APIs.

Let's explore how these fundamental capabilities work; once you understand them, you can build complete editing functionality.

# Implementing Core Capabilities

# Asset Loading and Storage

Audio and video assets are typically large, incurring time and bandwidth costs for upload and download.

While the Web platform previously had many file read/write limitations, the OPFS API now significantly improves user experience.

OPFS (Origin Private File System) provides each website with private storage space, allowing Web developers to create, read, and write files without user authorization, offering better performance compared to user space file operations.
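As a rough illustration of storing an asset in OPFS, the sketch below writes bytes to a file and reads them back. The interfaces `WritableLike`, `FileHandleLike`, and `DirLike` and the helpers `saveAsset` and `loadAsset` are hypothetical names introduced here; they cover only the `getFileHandle`, `createWritable`, `write`, `close`, and `getFile` methods of the browser's file system handles.

```typescript
// Minimal structural types covering only the OPFS methods this sketch calls.
interface WritableLike {
  write(data: Uint8Array): Promise<void>;
  close(): Promise<void>;
}
interface FileHandleLike {
  createWritable(): Promise<WritableLike>;
  getFile(): Promise<{ arrayBuffer(): Promise<ArrayBuffer> }>;
}
interface DirLike {
  getFileHandle(name: string, options?: { create?: boolean }): Promise<FileHandleLike>;
}

/** Writes an asset's bytes into the given directory, creating the file if needed. */
async function saveAsset(root: DirLike, name: string, bytes: Uint8Array): Promise<void> {
  const handle = await root.getFileHandle(name, { create: true });
  const writable = await handle.createWritable();
  await writable.write(bytes);
  await writable.close(); // the written data is committed when the stream is closed
}

/** Reads a previously stored asset back into memory. */
async function loadAsset(root: DirLike, name: string): Promise<Uint8Array> {
  const handle = await root.getFileHandle(name);
  const file = await handle.getFile();
  return new Uint8Array(await file.arrayBuffer());
}
```

In a browser, the root directory would come from `await navigator.storage.getDirectory()`. For large videos, reading the returned file in slices is likely preferable to loading the whole buffer as `loadAsset` does here.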

For details, read Web File System (OPFS and Tools) Introduction.

# Asset Parsing

A video is essentially a sequence of images.

Raw audio and video data is enormous; for efficient storage and transmission, it needs to be compressed and packaged into common media file formats.

- Image frames are compressed in groups (temporally adjacent images are often very similar, achieving higher compression ratios)

- Multiple compressed frame groups plus metadata (codec, duration, subtitles, etc.) form the audio/video file

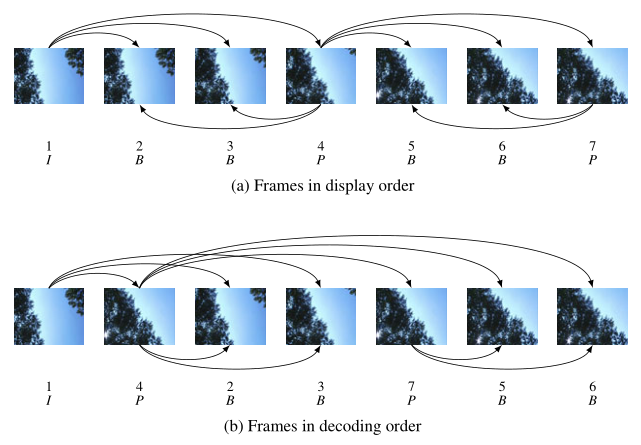

Compressed image frames are categorized into I/P/B types; each I-frame and the P/B frames that follow it form a Group of Pictures (GOP).

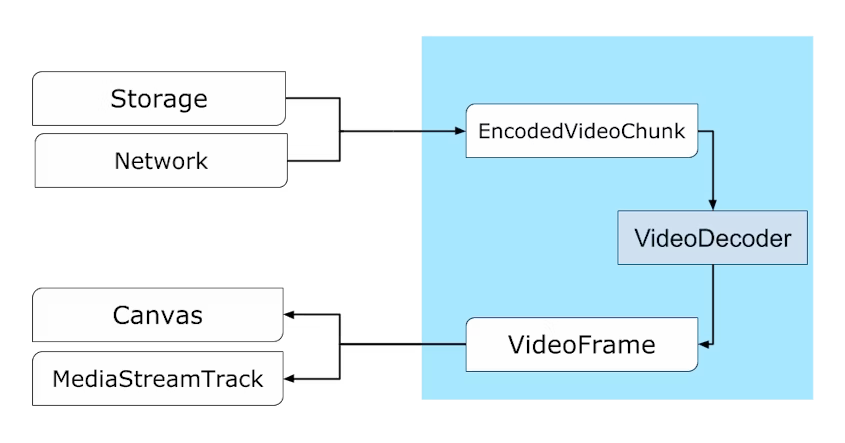

The first step in processing audio/video data is file parsing to obtain raw data, which follows this reverse process:

Video File -> Demux -> Compressed Frames -> Decode -> Raw Image Frames

- Use third-party libraries (like mp4box.js) to demux video files, obtaining compressed frames

- Use WebCodecs API to decode compressed frames into raw image frames

An EncodedVideoChunk (compressed frame) is converted into a VideoFrame (raw image frame) by a VideoDecoder.
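The decode step can be sketched as follows. This is an illustrative outline rather than the author's implementation: `decodeChunks` and the `FrameLike`, `DecoderLike`, and `DecoderCtor` types are hypothetical names, written structurally so that the browser's VideoDecoder can be passed in while the logic stays testable outside a browser. Demuxing (for example with mp4box.js) is assumed to have already produced the chunks.

```typescript
// Minimal structural types for the parts of WebCodecs this sketch touches.
interface FrameLike {
  timestamp: number;
  close(): void;
}
interface DecoderLike<Chunk, Config> {
  configure(config: Config): void;
  decode(chunk: Chunk): void;
  flush(): Promise<void>;
  close(): void;
}
type DecoderCtor<Chunk, Config, Frame> = new (init: {
  output: (frame: Frame) => void;
  error: (e: Error) => void;
}) => DecoderLike<Chunk, Config>;

/**
 * Feeds compressed chunks to a decoder and hands each raw frame to `onFrame`.
 * Each frame is closed right after the callback so its memory is released promptly.
 * Returns the number of frames delivered.
 */
async function decodeChunks<Chunk, Config, Frame extends FrameLike>(
  Decoder: DecoderCtor<Chunk, Config, Frame>,
  config: Config,
  chunks: Chunk[],
  onFrame: (frame: Frame) => void,
): Promise<number> {
  let count = 0;
  const errors: Error[] = [];
  const decoder = new Decoder({
    output: (frame) => {
      try {
        onFrame(frame);
        count += 1;
      } finally {
        frame.close();
      }
    },
    error: (e) => {
      errors.push(e);
    },
  });
  decoder.configure(config);
  for (const chunk of chunks) decoder.decode(chunk);
  await decoder.flush(); // resolves once every queued chunk has been output
  decoder.close();
  if (errors.length > 0) throw errors[0];
  return count;
}
```

In a browser, a call might look like `decodeChunks(VideoDecoder, { codec: "avc1.42E01E" }, chunks, draw)`, where the codec string is only an example; for H.264 in MP4 the configuration usually also needs the `description` bytes obtained from the demuxer.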

Learn more in Parsing Video in the Browser.

# Video Frame Seeking and Traversal

Since video files are typically large, loading them entirely into memory is impractical. Instead, data is read and decoded from disk as needed.

For example, to add a watermark to video frames between 10-20s:

- Locate frames between 10-20s in the file

- Read and decode corresponding frames from disk to get raw images

- Draw text on the images, then re-encode to generate new compressed frames

This shows that frame seeking and streaming traversal is the first step in audio/video processing.

As mentioned earlier, video frames are grouped with different types within groups, so seeking and decoding must follow a specific order.
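To make the seeking rule concrete, here is a small sketch that selects which samples must be decoded to display a time range such as 10-20s. The `SampleInfo` shape and the function name `samplesToDecode` are illustrative assumptions and do not come from any demuxer; the sketch also assumes closed GOPs, where no frame references a frame from an earlier group.

```typescript
/** One demuxed sample; the field names are illustrative. */
interface SampleInfo {
  pts: number; // presentation timestamp, in seconds
  isKeyframe: boolean;
}

/**
 * Given samples in decode order, returns the contiguous slice that must be decoded
 * in order to display every frame with startSec <= pts < endSec.
 * Decoding has to begin at the keyframe at or before the first wanted sample,
 * because P/B frames cannot be decoded without the frames they reference.
 */
function samplesToDecode(samples: SampleInfo[], startSec: number, endSec: number): SampleInfo[] {
  const wanted = (s: SampleInfo) => s.pts >= startSec && s.pts < endSec;
  const first = samples.findIndex(wanted);
  if (first === -1) return [];

  // Walk back to the nearest keyframe so the decoder has a valid starting point.
  let begin = first;
  while (begin > 0 && !samples[begin].isKeyframe) begin -= 1;

  // Find the last wanted sample in decode order.
  let end = first;
  for (let i = first + 1; i < samples.length; i++) {
    if (wanted(samples[i])) end = i;
  }
  return samples.slice(begin, end + 1);
}
```

The returned slice can contain frames outside the requested range (those between the keyframe and the start time); they still have to be decoded, but their output can be discarded.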

In display order, frame types and decoding order do not line up: a B-frame can reference a P-frame that is displayed after it, so that P-frame must be decoded first. Pay attention to both the position and the type of each frame.
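The difference between display order and decoding order can be shown with a tiny example. The timestamps below are made-up values for a four-frame group, and `FrameInfo`, `toDecodeOrder`, `toDisplayOrder`, and `label` are hypothetical names used only for this sketch.

```typescript
interface FrameInfo {
  type: "I" | "P" | "B";
  pts: number; // presentation timestamp: when the frame is shown
  dts: number; // decode timestamp: when the frame must be decoded
}

// Illustrative values: the P-frame is shown last but decoded second,
// because both B-frames reference it.
const frames: FrameInfo[] = [
  { type: "I", pts: 1, dts: 0 },
  { type: "B", pts: 2, dts: 2 },
  { type: "B", pts: 3, dts: 3 },
  { type: "P", pts: 4, dts: 1 },
];

/** Order in which frames are fed to the decoder. */
function toDecodeOrder(list: FrameInfo[]): FrameInfo[] {
  return [...list].sort((a, b) => a.dts - b.dts);
}

/** Order in which decoded frames are shown to the viewer. */
function toDisplayOrder(list: FrameInfo[]): FrameInfo[] {
  return [...list].sort((a, b) => a.pts - b.pts);
}

/** Formats a frame list as type plus PTS, for example "I1 B2". */
function label(list: FrameInfo[]): string {
  return list.map((f) => `${f.type}${f.pts}`).join(" ");
}

console.log(label(toDisplayOrder(frames))); // I1 B2 B3 P4
console.log(label(toDecodeOrder(frames))); // I1 P4 B2 B3
```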

Read more about The Relationship Between I/P/B Frames, GOP, IDR, and PTS, DTS.

# Image Processing

With the above knowledge, we can now freely read or traverse all image frames in a video file.

Simple image processing, like drawing new content (text, images) on original images or applying basic filters, can be implemented using Canvas API.
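As an example of a basic filter, the sketch below converts a pixel buffer to grayscale. It operates on the RGBA layout used by `ImageData.data`; the function name `grayscale` and the choice of Rec. 601 luma weights are assumptions made for this illustration.

```typescript
/**
 * Converts RGBA pixels to grayscale in place.
 * `pixels` uses the layout of ImageData.data: 4 bytes per pixel in R, G, B, A order.
 */
function grayscale(pixels: Uint8ClampedArray): void {
  for (let i = 0; i < pixels.length; i += 4) {
    // Rec. 601 luma weights; alpha at i + 3 is left untouched.
    const luma = 0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2];
    // Uint8ClampedArray rounds and clamps the value to 0..255 on assignment.
    pixels[i] = luma;
    pixels[i + 1] = luma;
    pixels[i + 2] = luma;
  }
}
```

With a 2D canvas context, the buffer would typically come from `ctx.getImageData(0, 0, width, height).data`, and the modified ImageData would be written back with `ctx.putImageData`.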

Complex image processing, such as green screen keying, effects, custom filters, requires WebGL Shader code.

WebGL Shader code runs on the GPU, efficiently processing all pixel values in each frame concurrently.
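To give a feel for per-pixel shader logic, the sketch below pairs a simple green screen fragment shader with a CPU function that applies the same rule, which can help when checking results. Everything here is an illustrative assumption: the uniform and varying names, the distance-based keying rule, and the `chromaKey` helper. Production keyers usually work in a different color space and soften the edges.

```typescript
// WebGL 1 fragment shader source: makes pixels close to the key color transparent.
const CHROMA_KEY_SHADER = `
precision mediump float;
uniform sampler2D u_frame;   // the video frame uploaded as a texture
uniform vec3 u_key;          // key color, each channel in 0..1
uniform float u_threshold;   // maximum RGB distance treated as background
varying vec2 v_uv;
void main() {
  vec4 c = texture2D(u_frame, v_uv);
  float d = distance(c.rgb, u_key);
  gl_FragColor = vec4(c.rgb, d < u_threshold ? 0.0 : c.a);
}
`;

/**
 * CPU reference of the same rule, applied in place to RGBA bytes.
 * `key` channels and `threshold` use the 0..1 range, matching the shader uniforms.
 */
function chromaKey(pixels: Uint8ClampedArray, key: [number, number, number], threshold: number): void {
  for (let i = 0; i < pixels.length; i += 4) {
    const dr = pixels[i] / 255 - key[0];
    const dg = pixels[i + 1] / 255 - key[1];
    const db = pixels[i + 2] / 255 - key[2];
    const dist = Math.sqrt(dr * dr + dg * dg + db * db);
    if (dist < threshold) pixels[i + 3] = 0; // background pixel: fully transparent
  }
}
```

The shader evaluates this rule for every pixel in parallel on the GPU, whereas the CPU loop visits pixels one at a time.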

# Spatial and Temporal Properties

Spatial properties refer to an asset's coordinates, size, and rotation angle.

When exporting video, we can create animation effects by dynamically setting spatial properties of image frames based on time.

For example, for an image asset's translation animation (0s ~ 1s, x-coordinate 10 ~ 100), at 0.5s the frame coordinate would be x = 10 + (100 - 10) * (0.5 / 1) = 55.
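The calculation is a linear interpolation: the start value plus the total change scaled by the elapsed fraction of the animation. A minimal sketch, where the function name `interpolateProperty` and the clamping outside the animation window are assumptions:

```typescript
/**
 * Linearly interpolates a spatial property between two keyframe values.
 * Before startSec the result is `from`; after endSec it is `to`.
 */
function interpolateProperty(
  from: number,
  to: number,
  startSec: number,
  endSec: number,
  timeSec: number,
): number {
  const span = endSec - startSec;
  // Fraction of the animation that has elapsed, clamped to 0..1.
  const progress = span <= 0 ? 1 : Math.min(1, Math.max(0, (timeSec - startSec) / span));
  return from + (to - from) * progress;
}

console.log(interpolateProperty(10, 100, 0, 1, 0.5)); // 55
```

Calling this once per exported frame with that frame's timestamp produces the translation animation; the same function works for size or rotation.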

Temporal properties refer to an asset's time offset and duration in the video.

These two properties describe an asset's position on the video timeline.
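Offset and duration are enough to decide which assets contribute to a given output frame. The sketch below illustrates this; `TimelineAsset`, `activeAssets`, and `localTime` are hypothetical names, and treating the end of an asset as exclusive is an assumption.

```typescript
/** An asset placed on the timeline; times are in seconds. */
interface TimelineAsset {
  id: string;
  offsetSec: number; // where the asset starts on the video timeline
  durationSec: number; // how long it stays visible or audible
}

/** Returns the assets that are active at `timeSec` (start inclusive, end exclusive). */
function activeAssets(assets: TimelineAsset[], timeSec: number): TimelineAsset[] {
  return assets.filter(
    (a) => timeSec >= a.offsetSec && timeSec < a.offsetSec + a.durationSec,
  );
}

/** Converts a timeline time into a time relative to the start of the asset. */
function localTime(asset: TimelineAsset, timeSec: number): number {
  return timeSec - asset.offsetSec;
}
```

When rendering the frame at timeline time t, each active asset is asked for its content at `localTime(asset, t)`.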

When assets support spatial and temporal properties, we can not only programmatically set properties for animations but also enable user control (drag, scale, rotate, etc.) through mouse operations.

# Conclusion

- With an understanding of how these fundamental capabilities work, plus some patience and time, you can implement most video editing features

- These capabilities extend beyond editing to client-side batch video processing, live streaming, and enhanced playback scenarios

- Audio/video processing on the Web platform involves many details and requires numerous APIs. Continue exploring through our article series and open-source WebAV SDK.

# Appendix

- Web Audio & Video Introductory Series

- WebCodecs Performance and Optimization Insights

- WebAV: SDK for creating/editing audio and video files on the Web platform

- Parsing Video in the Browser

- High-Performance Video Frame Capture Based on WebCodecs

- Web File System (OPFS and Tools) Introduction

- Relationship Between I-frames, P-frames, B-frames, GOP, IDR, and PTS, DTS