# Web Audio & Video (1) Fundamentals

Before reading the rest of this series or using WebAV for audio and video processing, it helps to have some background knowledge.

This article provides a brief introduction to the fundamental concepts of audio and video, as well as the core APIs of WebCodecs.

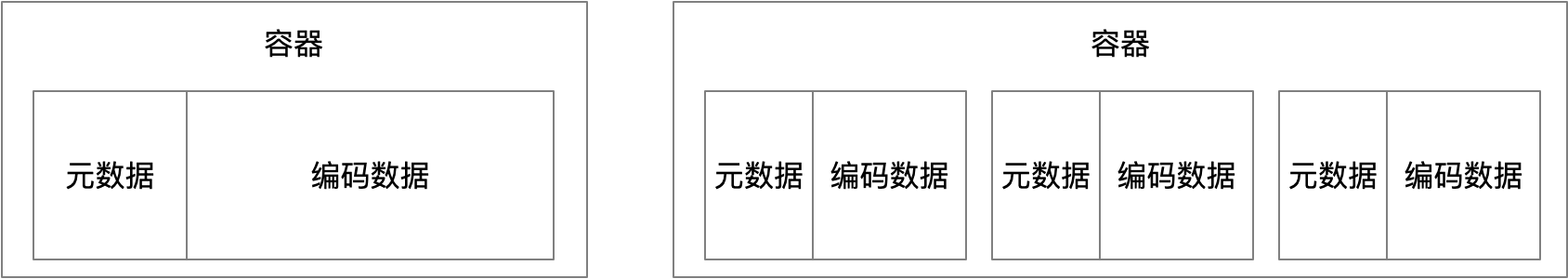

# Video Structure

A video file can be thought of as a container that holds metadata and encoded data (compressed audio or video).

Container formats differ in how they organize and manage this metadata and encoded data.
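As a concrete example of how a container organizes its contents: MP4 (the ISO Base Media File Format) stores everything in nested "boxes", each starting with a 4-byte big-endian size and a 4-character type code. Metadata usually sits in a `moov` box and the encoded samples in an `mdat` box. The function below is a minimal sketch that only lists the top-level boxes of a file already loaded into memory; `listTopLevelBoxes` and `Box` are illustrative names, and a real demuxer (for example, the mp4box.js library) also walks nested boxes and sample tables.

```typescript
// One top-level MP4 (ISO BMFF) box: four-character type, byte offset, total size.
interface Box {
  type: string;
  offset: number;
  size: number;
}

// List the top-level boxes of an MP4 file held entirely in memory.
// Nested boxes (e.g. moov > trak > mdia) are not visited.
function listTopLevelBoxes(bytes: Uint8Array): Box[] {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const boxes: Box[] = [];
  let offset = 0;
  while (offset + 8 <= bytes.byteLength) {
    // Header: 32-bit big-endian size, then a 4-character type code.
    let size = view.getUint32(offset);
    const type = Array.from(bytes.subarray(offset + 4, offset + 8), (b) =>
      String.fromCharCode(b),
    ).join("");
    if (size === 1) {
      // A 64-bit "largesize" follows the type (used for boxes over 4 GiB).
      if (offset + 16 > bytes.byteLength) break;
      size = view.getUint32(offset + 8) * 2 ** 32 + view.getUint32(offset + 12);
    } else if (size === 0) {
      // Size 0 means the box extends to the end of the file.
      size = bytes.byteLength - offset;
    }
    if (size < 8) break; // malformed header; stop instead of looping forever
    boxes.push({ type, offset, size });
    offset += size;
  }
  return boxes;
}
```

In a browser, the bytes could come from `new Uint8Array(await file.arrayBuffer())` for a user-selected `File`; a typical non-fragmented MP4 includes `ftyp`, `moov`, and `mdat`, with `moov` placed either before or after `mdat`.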

# Codec Formats

The primary purpose of encoding is compression; each codec format is a different compression algorithm.

Compression is necessary because raw sampled data (images, audio) is too large to store or transmit as-is.
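To put numbers on "too large", the sketch below estimates raw data rates, assuming 8-bit I420 (YUV 4:2:0) video, which takes 1.5 bytes per pixel, and 16-bit PCM audio. The function names are illustrative.

```typescript
// Raw I420 video: a full-resolution Y plane plus two quarter-resolution
// chroma planes, i.e. 1.5 bytes per pixel at 8 bits per sample.
function rawVideoBytesPerSecond(width: number, height: number, fps: number): number {
  const bytesPerFrame = width * height * 1.5;
  return bytesPerFrame * fps;
}

// Raw PCM audio: sampleRate samples per second per channel, bitsPerSample bits each.
function rawAudioBitsPerSecond(sampleRate: number, channels: number, bitsPerSample: number): number {
  return sampleRate * channels * bitsPerSample;
}

const videoBytesPerSec = rawVideoBytesPerSecond(1920, 1080, 30);
const audioBitsPerSec = rawAudioBitsPerSecond(48000, 2, 16);

console.log(`1080p30 raw video: ${((videoBytesPerSec * 8) / 1e6).toFixed(1)} Mbit/s`); // 746.5
console.log(`One minute of it: ${((videoBytesPerSec * 60) / 1e9).toFixed(2)} GB`); // 5.60
console.log(`48 kHz stereo 16-bit PCM: ${audioBitsPerSec / 1000} kbit/s`); // 1536
```

For comparison, a 1080p video stream delivered over the web is typically on the order of a few Mbit/s, so the video encoder is expected to shrink the data by roughly two orders of magnitude.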

Codec formats differ in compression ratio, compatibility, and complexity.

Generally, newer formats achieve higher compression ratios at the cost of lower compatibility and higher complexity.

Different use cases (VOD, live streaming, video conferencing) call for different trade-offs among these three factors.

Common Video Codecs

- H.264 (AVC), 2003

- H.265 (HEVC), 2013

- AV1, 2018

Common Audio Codecs

- MP3, 1991

- AAC, 1997

- Opus, 2012
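In WebCodecs, a codec is selected by the codec string in the `codec` field of the encoder or decoder config. Video strings start with a registered prefix followed by profile and level details, while many audio strings are short fixed values. The mapping below is a hand-written sketch covering only the codecs listed above; the prefixes and strings follow the WebCodecs codec registry, but the table is deliberately incomplete and `codecFamily` is a hypothetical helper.

```typescript
type CodecFamily = "H.264" | "H.265" | "AV1" | "MP3" | "AAC" | "Opus";

// Video codec strings: a fixed prefix, then profile/level details.
const VIDEO_PREFIXES: Array<[string, CodecFamily]> = [
  ["avc1.", "H.264"],
  ["avc3.", "H.264"],
  ["hvc1.", "H.265"],
  ["hev1.", "H.265"],
  ["av01.", "AV1"],
];

// A few common audio codec strings, matched exactly (not an exhaustive list).
const AUDIO_STRINGS = new Map<string, CodecFamily>([
  ["mp3", "MP3"],
  ["mp4a.40.2", "AAC"], // AAC-LC
  ["mp4a.40.5", "AAC"], // HE-AAC
  ["mp4a.40.29", "AAC"], // HE-AAC v2
  ["opus", "Opus"],
]);

// Return the codec family for a codec string, or undefined if not in the tables.
function codecFamily(codec: string): CodecFamily | undefined {
  const audio = AUDIO_STRINGS.get(codec);
  if (audio !== undefined) return audio;
  const hit = VIDEO_PREFIXES.find(([prefix]) => codec.startsWith(prefix));
  return hit ? hit[1] : undefined;
}
```

To probe the compatibility side of the trade-off in a browser, `await VideoEncoder.isConfigSupported({ codec: "avc1.42E01F", width: 1280, height: 720 })` resolves to an object whose `supported` field says whether the current browser can encode that configuration; `AudioEncoder.isConfigSupported` plays the same role for audio.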

# Container (Muxing) Formats

Encoded data is compressed raw data; it needs accompanying metadata to be parsed and played back correctly.

Common metadata includes timing information, codec format, resolution, bitrate, and more.
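Timing is the piece of metadata most easily mishandled. MP4 stores sample times as integer ticks of a per-track timescale (ticks per second), while WebCodecs' `EncodedVideoChunk` and `EncodedAudioChunk` take `timestamp` and `duration` in microseconds, so a demuxer feeding WebCodecs has to convert between the two. The helper below is a minimal sketch of that conversion; its name is illustrative.

```typescript
const MICROSECONDS_PER_SECOND = 1_000_000;

// Convert a container time in ticks (timescale = ticks per second) to the
// integer microseconds that WebCodecs chunk timestamps and durations use.
function ticksToMicroseconds(ticks: number, timescale: number): number {
  if (!Number.isFinite(timescale) || timescale <= 0) {
    throw new RangeError(`invalid timescale: ${timescale}`);
  }
  return Math.round((ticks * MICROSECONDS_PER_SECOND) / timescale);
}

// A 29.97 fps track with timescale 30000 advances 1001 ticks per frame.
console.log(ticksToMicroseconds(1001, 30000)); // 33367
// Timestamp of the fifth frame (index 4), converted from its absolute tick value.
console.log(ticksToMicroseconds(4 * 1001, 30000)); // 133467
```

Converting each absolute tick value, rather than summing already-rounded durations, keeps rounding errors from accumulating. The result can go straight into `new EncodedVideoChunk({ type, timestamp, data })`.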

MP4 is the most common and best-supported video format on the web, which is why the example programs in later articles work with MP4 files.

An MP4 file containing AVC (video codec) and AAC (audio codec) offers the best compatibility.
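Browsers describe such a file with a MIME type whose `codecs` parameter lists codec strings, e.g. `video/mp4; codecs="avc1.42E01E, mp4a.40.2"`. For AVC, the six hex digits after `avc1.` encode `profile_idc`, a constraint-flags byte, and `level_idc`; `avc1.42E01E` is Baseline profile (0x42 = 66), constraint flags 0xE0, level 3.0 (0x1E = 30). The sketch below decodes these fields; the profile-name table covers only three common profiles, and both helper names are hypothetical.

```typescript
interface AvcCodecInfo {
  profileIdc: number;
  profileName: string;
  constraintFlags: number;
  level: number; // level_idc / 10, e.g. 3.1
}

// Names for a few common profile_idc values (not an exhaustive list).
const AVC_PROFILE_NAMES = new Map<number, string>([
  [66, "Baseline"],
  [77, "Main"],
  [100, "High"],
]);

// Parse "avc1.PPCCLL" (or "avc3.PPCCLL"): three hex bytes holding
// profile_idc, the constraint flags, and level_idc.
function parseAvcCodecString(codec: string): AvcCodecInfo {
  if (!/^avc[13]\.[0-9a-f]{6}$/i.test(codec)) {
    throw new Error(`not an avc1/avc3 codec string: ${codec}`);
  }
  // Byte i of the hex payload, which starts after the 5-character "avc1." prefix.
  const byteAt = (i: number): number => parseInt(codec.slice(5 + 2 * i, 7 + 2 * i), 16);
  const profileIdc = byteAt(0);
  return {
    profileIdc,
    profileName: AVC_PROFILE_NAMES.get(profileIdc) ?? `profile_idc ${profileIdc}`,
    constraintFlags: byteAt(1),
    level: byteAt(2) / 10, // ignores the special encoding of level 1b
  };
}

// MIME type with a codecs parameter, e.g. for MediaSource.isTypeSupported().
function mp4MimeType(videoCodec: string, audioCodec: string): string {
  return `video/mp4; codecs="${videoCodec}, ${audioCodec}"`;
}
```

In a browser, `MediaSource.isTypeSupported(mp4MimeType("avc1.42E01E", "mp4a.40.2"))` reports whether that AVC + AAC combination can be played through Media Source Extensions.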

Other Common Formats

- FLV: flv.js works mainly by remuxing FLV into fMP4 (fragmented MP4), which lets browsers play FLV videos

- WebM: an open, royalty-free format, commonly produced by MediaRecorder
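Before choosing a processing path (for example, handing an FLV file to flv.js for remuxing), you often need to know which container a file uses. The first few bytes usually tell: FLV starts with the ASCII signature `FLV`, WebM and Matroska start with the EBML magic number `1A 45 DF A3`, and MP4 normally opens with an `ftyp` box. The sketch below checks only these signatures; `sniffContainer` is a hypothetical helper, and telling WebM apart from other Matroska files would require reading the EBML DocType element.

```typescript
type ContainerFormat = "MP4" | "WebM/Matroska" | "FLV" | "unknown";

// Guess the container from the first bytes of a file. Only well-known
// signatures are checked; the rest of the file is not validated.
function sniffContainer(head: Uint8Array): ContainerFormat {
  // Out-of-range reads on a typed array yield undefined; map them to -1.
  const at = (i: number): number => head[i] ?? -1;
  // FLV: ASCII "FLV".
  if (at(0) === 0x46 && at(1) === 0x4c && at(2) === 0x56) return "FLV";
  // WebM is a Matroska profile; both begin with the EBML magic 1A 45 DF A3.
  if (at(0) === 0x1a && at(1) === 0x45 && at(2) === 0xdf && at(3) === 0xa3) return "WebM/Matroska";
  // MP4 (ISO BMFF): a 4-byte box size, then the type "ftyp".
  if (at(4) === 0x66 && at(5) === 0x74 && at(6) === 0x79 && at(7) === 0x70) return "MP4";
  return "unknown";
}
```

In a browser, `sniffContainer(new Uint8Array(await file.slice(0, 12).arrayBuffer()))` reads just the header of a `File`. Note that a standalone fMP4 media segment may begin with `styp` or `moof` instead of `ftyp`, so this check targets whole files and initialization segments.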

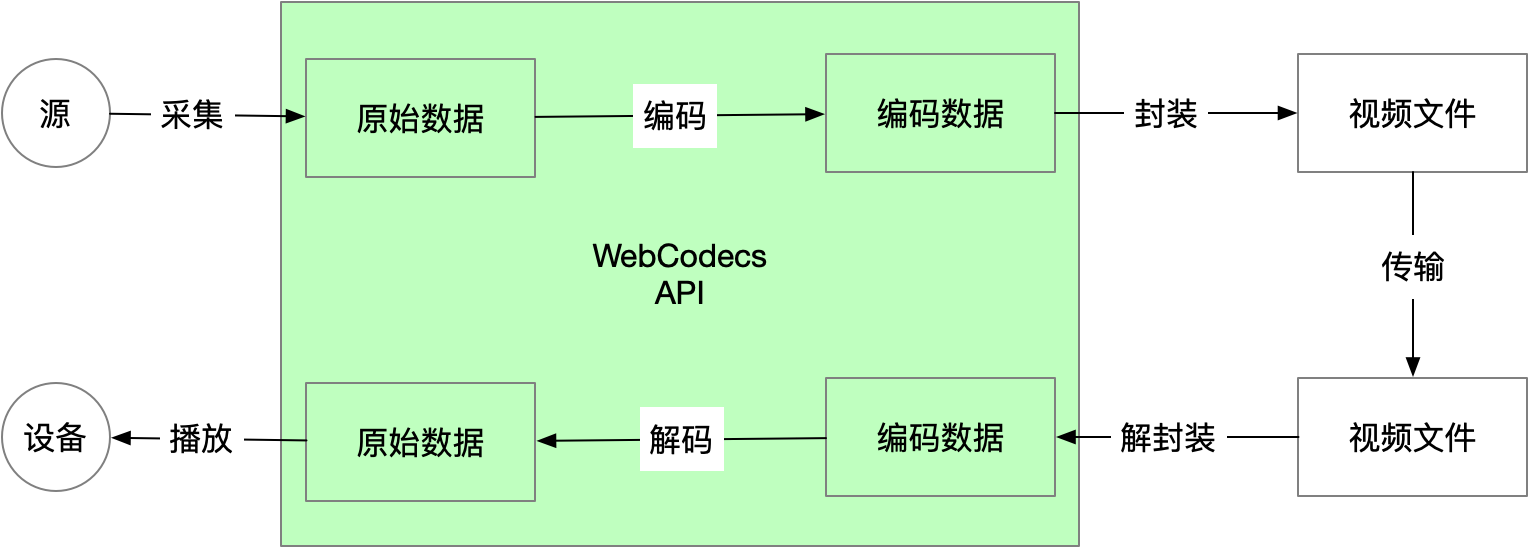

# WebCodecs Core APIs

As the diagram above shows, WebCodecs operates only at the encoding/decoding stage; it does not handle muxing or demuxing.

The diagram nodes map to APIs as follows:

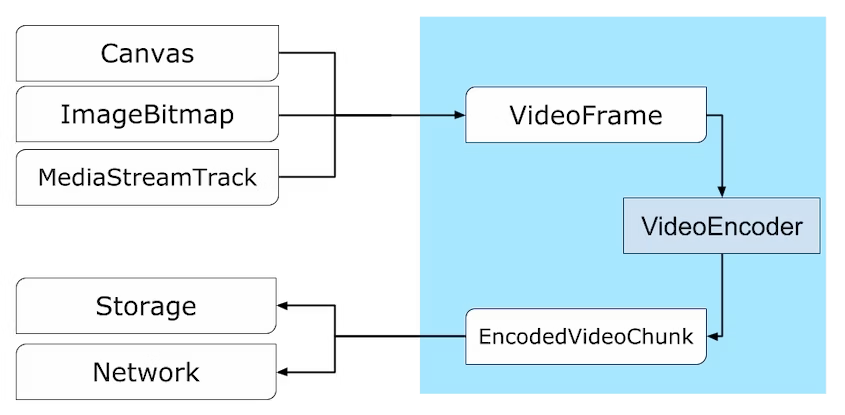

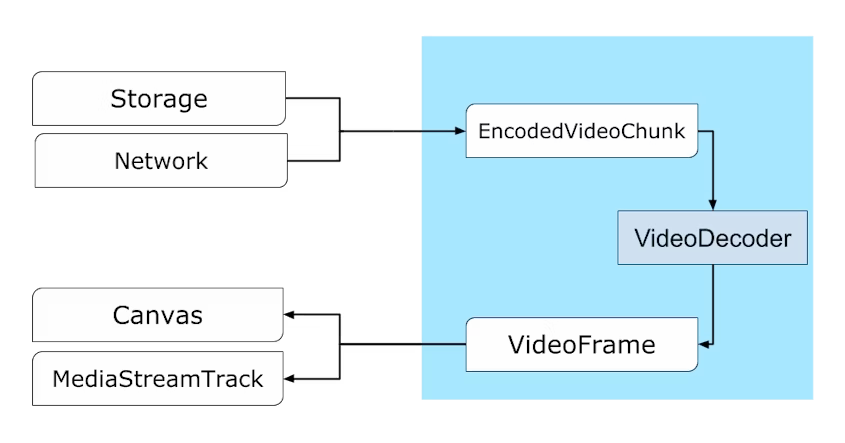

Video

- Raw image data: VideoFrame

- Image encoder: VideoEncoder

- Compressed image data: EncodedVideoChunk

- Image decoder: VideoDecoder

Data transformation flow:

VideoFrame -> VideoEncoder => EncodedVideoChunk -> VideoDecoder => VideoFrame
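The flow above can be sketched as one round trip: each input frame goes into a VideoEncoder, each EncodedVideoChunk it emits is passed to a VideoDecoder, and the decoded VideoFrames are collected. The decoder is configured from the `decoderConfig` that the encoder attaches to its output metadata. So that the sketch runs outside a browser, the codecs are created through factory functions typed with small structural interfaces (`EncoderLike`, `DecoderLike`, and so on, defined here and not part of WebCodecs); the browser's classes should fit those shapes. `roundTrip` itself is a hypothetical helper, not a WebCodecs or WebAV API.

```typescript
// Structural stand-ins for the WebCodecs pieces this sketch uses. They are our own
// types; browser VideoEncoder, VideoDecoder, VideoFrame and EncodedVideoChunk fit them.
interface Closable {
  close(): void;
}
interface OutputMetadata {
  decoderConfig?: unknown;
}
interface EncoderInit<C> {
  output: (chunk: C, metadata?: OutputMetadata) => void;
  error: (e: Error) => void;
}
interface DecoderInit<F> {
  output: (frame: F) => void;
  error: (e: Error) => void;
}
interface EncoderLike<F> {
  state: string; // "unconfigured" | "configured" | "closed" in WebCodecs
  configure(config: unknown): void;
  encode(frame: F, options?: { keyFrame?: boolean }): void;
  flush(): Promise<void>;
  close(): void;
}
interface DecoderLike<C> {
  state: string;
  configure(config: unknown): void;
  decode(chunk: C): void;
  flush(): Promise<void>;
  close(): void;
}

// VideoFrame -> VideoEncoder => EncodedVideoChunk -> VideoDecoder => VideoFrame
async function roundTrip<F extends Closable, C>(
  frames: F[],
  encoderConfig: unknown,
  makeEncoder: (init: EncoderInit<C>) => EncoderLike<F>,
  makeDecoder: (init: DecoderInit<F>) => DecoderLike<C>,
): Promise<F[]> {
  const decoded: F[] = [];
  const errors: Error[] = [];
  const onError = (e: Error) => {
    errors.push(e);
  };

  const decoder = makeDecoder({ output: (frame) => decoded.push(frame), error: onError });
  let decoderConfigured = false;

  const encoder = makeEncoder({
    output: (chunk, metadata) => {
      // The encoder attaches a decoderConfig to the first chunk (and whenever it
      // changes); use it to (re)configure the matching decoder.
      if (metadata?.decoderConfig !== undefined) {
        decoder.configure(metadata.decoderConfig);
        decoderConfigured = true;
      }
      if (decoderConfigured) decoder.decode(chunk);
    },
    error: onError,
  });

  try {
    encoder.configure(encoderConfig);
    frames.forEach((frame, i) => {
      encoder.encode(frame, { keyFrame: i === 0 });
      frame.close(); // encode() clones the frame internally, so release ours right away
    });
    await encoder.flush();
    if (decoderConfigured) await decoder.flush();
  } finally {
    // Close codecs explicitly; calling close() on an already-closed codec throws.
    if (encoder.state !== "closed") encoder.close();
    if (decoder.state !== "closed") decoder.close();
  }
  if (errors.length > 0) throw errors[0];
  return decoded;
}
```

In a browser, a call might look like `roundTrip(frames, { codec: "avc1.42E01F", width: 1280, height: 720 }, (init) => new VideoEncoder(init), (init) => new VideoDecoder(init))`; the caller then owns the returned frames and must close each one.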

Audio

- Raw audio data: AudioData

- Audio encoder: AudioEncoder

- Compressed audio data: EncodedAudioChunk

- Audio decoder: AudioDecoder

*Audio data transformation is symmetrical to video:*

AudioData -> AudioEncoder => EncodedAudioChunk -> AudioDecoder => AudioData

This symmetry, both between encoding and decoding and between audio and video, makes WebCodecs easier to understand and master. It is one of the API's design goals, stated as "Symmetry: have similar patterns for encoding and decoding."

# WebCodecs API Considerations

Here are some common pitfalls that newcomers should be aware of:

- VideoFrame objects can hold significant GPU memory; close them as soon as they are no longer needed to avoid performance problems

- VideoDecoder holds only a limited number of output VideoFrames; if they are not closed promptly, it will pause and stop outputting new VideoFrames

- Check encodeQueueSize regularly; if the encoder cannot keep up, pause producing new VideoFrames

- Close encoders and decoders explicitly (e.g., with VideoEncoder.close) once you are done with them; otherwise they might block other codec operations
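The first and third pitfalls can be handled together in one encode loop: close each frame as soon as it has been handed to the encoder, and stop producing frames while `encodeQueueSize` is above a threshold, resuming on the encoder's `dequeue` event (fired when its queue shrinks, in browsers that support it; older ones would need polling instead). This is a sketch under assumptions: `QueueingEncoder` is our own structural type that a browser VideoEncoder should satisfy, `produceFrame` stands in for whatever source creates your frames, and the default threshold of 2 is arbitrary. The same pattern applies to VideoDecoder via `decodeQueueSize`.

```typescript
interface ClosableFrame {
  close(): void;
}

// The subset of VideoEncoder used here; a browser VideoEncoder fits this shape.
interface QueueingEncoder<F> {
  readonly encodeQueueSize: number;
  encode(frame: F): void;
  addEventListener(type: "dequeue", listener: () => void, options?: { once?: boolean }): void;
}

// Resolves the next time the encoder fires "dequeue" (its queue got shorter).
function nextDequeue<F>(encoder: QueueingEncoder<F>): Promise<void> {
  return new Promise((resolve) => {
    encoder.addEventListener("dequeue", () => resolve(), { once: true });
  });
}

// Encode frames from a (hypothetical) producer without letting the encoder's
// queue grow unbounded. Returns the number of frames encoded.
async function encodeWithBackpressure<F extends ClosableFrame>(
  encoder: QueueingEncoder<F>,
  produceFrame: (index: number) => F | null, // null signals the end of input
  maxQueueSize = 2,
): Promise<number> {
  let count = 0;
  for (;;) {
    // Pause production while the encoder is falling behind.
    while (encoder.encodeQueueSize > maxQueueSize) {
      await nextDequeue(encoder);
    }
    const frame = produceFrame(count);
    if (frame === null) return count;
    try {
      encoder.encode(frame);
    } finally {
      frame.close(); // free the frame's memory as soon as the encoder has it
    }
    count++;
  }
}
```

Producing frames lazily through a callback, instead of building an array up front, means no more than a few unencoded frames are alive at any moment.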

# Appendix

- WebAV: an audio and video processing SDK built on WebCodecs

- VideoFrame, AudioData

- VideoEncoder, VideoDecoder

- AudioEncoder, AudioDecoder

- EncodedVideoChunk, EncodedAudioChunk